Send LLM Prompts with a Click via a Browser Extension

This extension lets you trigger your recurring prompts with a single click. Choose your preferred LLM API, define your prompts, and run them on any tab directly in the browser.

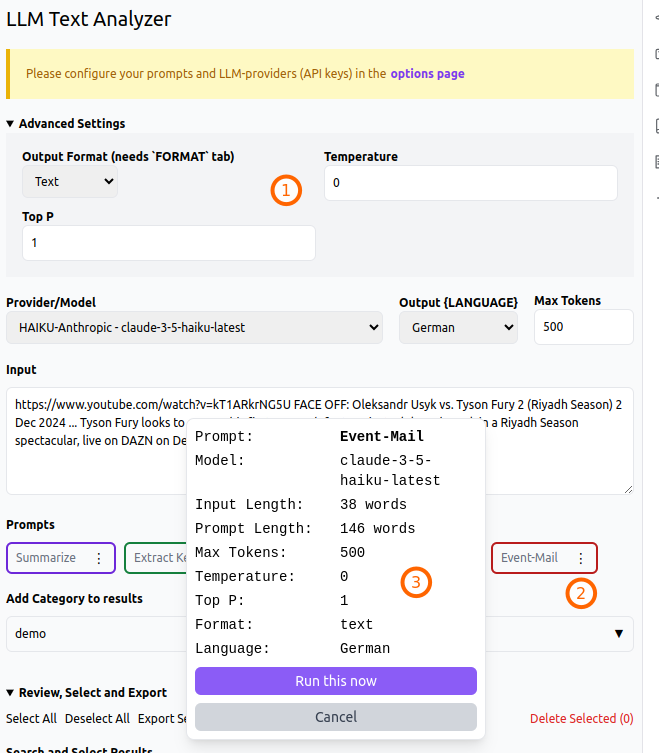

Example: Transform a search result into an event invitation email with just one click.

- On the search results page, select the desired text and send it to the extension sidebar using the context menu.

- Review your input and choose the appropriate model parameters.

- Click your prompt, in this case ‘Event Mail’.

- See the result in the sidebar.
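At its core, the one-click flow above combines the text you sent to the sidebar with a stored prompt template. The following is a minimal TypeScript sketch of that step. The `PromptTemplate` shape and the `{{input}}` placeholder are hypothetical conventions chosen for illustration, not the extension's documented format:

```typescript
// Hypothetical template shape; the extension's real storage schema may differ.
interface PromptTemplate {
  name: string;     // label shown on the sidebar button, e.g. "Event Mail"
  template: string; // prompt text; "{{input}}" marks where the sidebar input goes (assumed convention)
}

const PLACEHOLDER = "{{input}}";

// Merge the sidebar input (e.g. text sent via the context menu) into a template.
// Templates without a placeholder get the input appended after a blank line.
function fillTemplate(prompt: PromptTemplate, input: string): string {
  if (prompt.template.includes(PLACEHOLDER)) {
    // split/join replaces every occurrence without needing regex escaping
    return prompt.template.split(PLACEHOLDER).join(input);
  }
  return `${prompt.template}\n\n${input}`;
}

// Illustrative usage with made-up data
const eventMail: PromptTemplate = {
  name: "Event Mail",
  template: "Write a short invitation email for the following event:\n{{input}}",
};

console.log(fillTemplate(eventMail, "Community meetup, Friday 7 pm, town hall"));
```

This sketch appends the input when a template has no placeholder, so plain templates such as "Summarize:" still work. That fallback is a design choice of the sketch, not necessarily how the extension behaves.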

What is this for?

The extension stores and manages your prompts. It opens a sidebar with an input field and displays your predefined prompts as buttons, so you can send any of them with a single click.

Why?

Over the past year, I noticed that my prompt templates had become scattered across more and more places. Some are stored on the servers of LLM providers, some in the archives or history of various GenAI tools, and others in project folders or inside other documents. I wanted a central place for the prompts I use most often, or for particular collections of them. That lets me test them in the field (on the web), adjust them as needed, compare models, draft examples for future prompts, and so on.

Download

It's not in the Chrome Web Store yet, but you can try it by turning on “Developer mode” at chrome://extensions and then clicking “Load unpacked”.

You can find a working demo on GitHub.

Be aware: browser extensions can access your data.

This extension stores its data in your browser (chrome.storage.local). All your data stays local. Apart from the requests to the LLM providers you configure, no data is sent to any server.

Interested in the source code? Let me know via email.

It's built with React, TypeScript, and Tailwind.

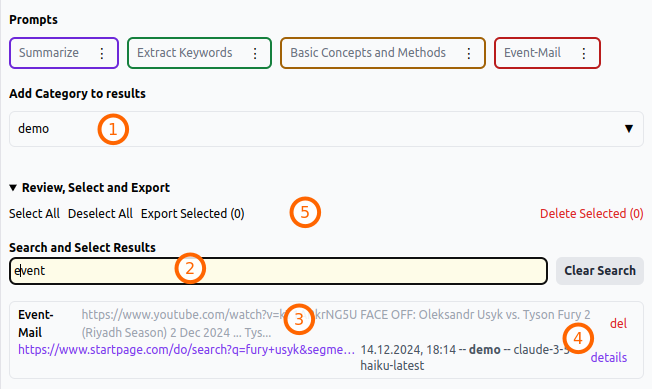

Demo: History, Search, Prompt, Save, Share

- Add tags or categories to your history entries.

- Search your history.

- Each history entry contains the user/agent conversation plus metadata such as the page, the date, and the tags.

- View an entry's details or delete it.

- Export selected entries as JSON or delete them in bulk.
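The history features listed above map onto a small data model: an entry type, a search filter, and JSON export or bulk delete over a selection. Here is a minimal TypeScript sketch of that model. The `HistoryEntry` fields and the helper names are hypothetical and are not taken from the extension's source:

```typescript
// Hypothetical history entry shape; field names are illustrative only.
interface Message {
  role: "user" | "assistant";
  content: string;
}

interface HistoryEntry {
  id: string;
  messages: Message[]; // the user/agent conversation
  pageUrl: string;     // page the prompt was run on
  createdAt: string;   // ISO 8601 timestamp
  tags: string[];
}

// Case-insensitive search over conversation text, page URL, and tags.
function searchHistory(entries: HistoryEntry[], query: string): HistoryEntry[] {
  const q = query.trim().toLowerCase();
  if (q === "") return entries;
  return entries.filter((e) =>
    e.messages.some((m) => m.content.toLowerCase().includes(q)) ||
    e.pageUrl.toLowerCase().includes(q) ||
    e.tags.some((t) => t.toLowerCase().includes(q))
  );
}

// Serialize only the selected entries as pretty-printed JSON for export.
function exportSelected(entries: HistoryEntry[], selectedIds: Set<string>): string {
  return JSON.stringify(entries.filter((e) => selectedIds.has(e.id)), null, 2);
}

// Bulk delete: keep every entry that is not selected.
function deleteSelected(entries: HistoryEntry[], selectedIds: Set<string>): HistoryEntry[] {
  return entries.filter((e) => !selectedIds.has(e.id));
}

// Illustrative usage with made-up entries
const history: HistoryEntry[] = [
  { id: "1", messages: [{ role: "user", content: "Draft an event mail" }], pageUrl: "https://example.com", createdAt: "2024-05-01T10:00:00Z", tags: ["events"] },
  { id: "2", messages: [{ role: "user", content: "Summarize this article" }], pageUrl: "https://example.org", createdAt: "2024-05-02T10:00:00Z", tags: ["reading"] },
];

console.log(searchHistory(history, "EVENT").map((e) => e.id));
console.log(exportSelected(history, new Set(["2"])));
```

Keeping these helpers as pure functions over an array means the same logic works whether the entries come from chrome.storage.local or from an imported JSON file.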

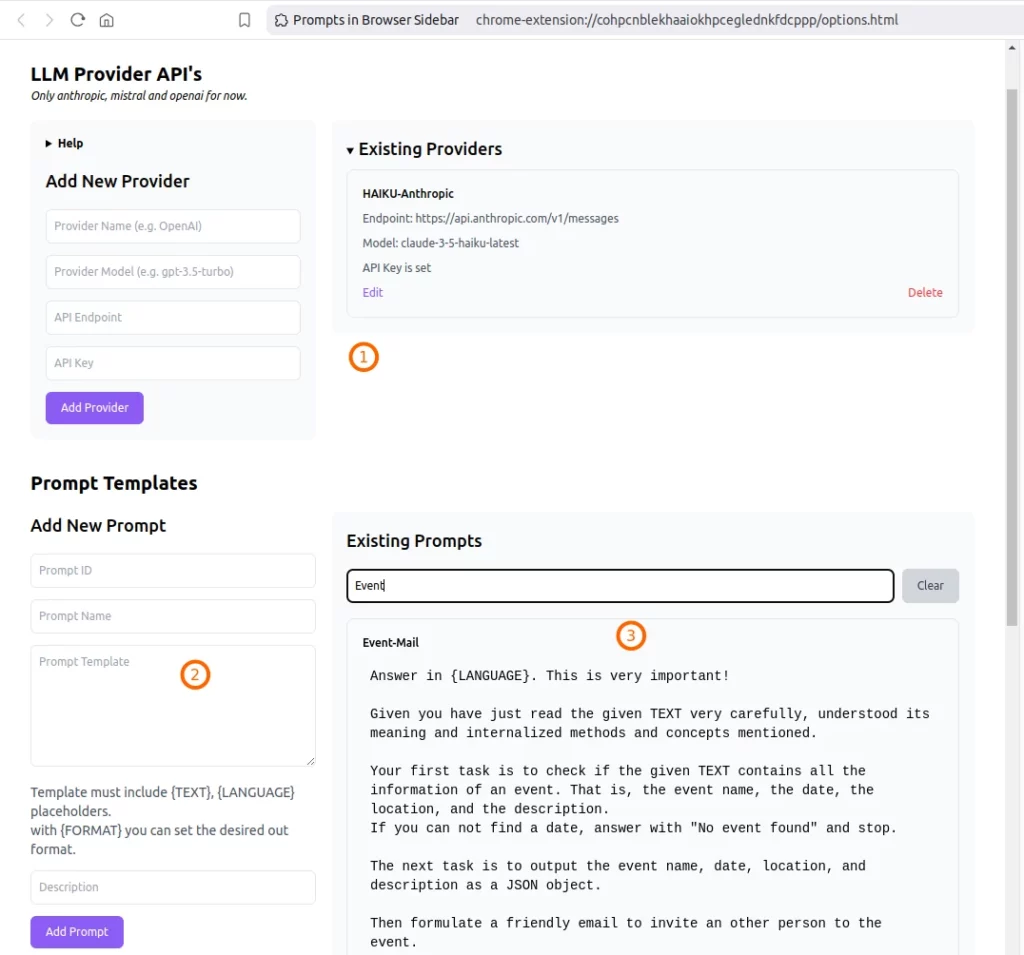

LLM Provider and Prompt Template Library

- Add providers. Yes, you need API keys for this.

- Add prompt templates.

- Search your prompt templates.

- Import/export your settings as JSON (not shown in the screenshot).
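To illustrate the provider and template library features above, the following minimal TypeScript sketch covers template search and a JSON settings import/export with basic validation. The `Settings` layout and all function names are hypothetical, since the extension's actual export format isn't documented here. One design choice in this sketch is that API keys are blanked on export unless you explicitly ask for them, so an exported file can be shared more safely:

```typescript
// Hypothetical settings layout; the extension's real export format may differ.
interface ProviderConfig {
  name: string;    // display name, e.g. "OpenAI"
  baseUrl: string; // API endpoint
  apiKey: string;  // secret: avoid sharing exports that contain it
  model: string;
}

interface PromptTemplate {
  name: string;
  template: string;
}

interface Settings {
  providers: ProviderConfig[];
  templates: PromptTemplate[];
}

// Case-insensitive search over template names and texts.
function searchTemplates(templates: PromptTemplate[], query: string): PromptTemplate[] {
  const q = query.trim().toLowerCase();
  return templates.filter(
    (t) => t.name.toLowerCase().includes(q) || t.template.toLowerCase().includes(q)
  );
}

// Export settings as JSON; API keys are blanked unless includeKeys is true.
function exportSettings(settings: Settings, includeKeys = false): string {
  const providers = settings.providers.map((p) => (includeKeys ? p : { ...p, apiKey: "" }));
  return JSON.stringify({ ...settings, providers }, null, 2);
}

// Parse and minimally validate an imported settings file; throws on malformed input.
function importSettings(json: string): Settings {
  const data: unknown = JSON.parse(json);
  if (typeof data !== "object" || data === null) throw new Error("Settings must be a JSON object");
  const { providers, templates } = data as Record<string, unknown>;
  if (!Array.isArray(providers) || !Array.isArray(templates)) {
    throw new Error("Settings need 'providers' and 'templates' arrays");
  }
  for (const t of templates) {
    if (typeof t?.name !== "string" || typeof t?.template !== "string") {
      throw new Error("Each template needs string 'name' and 'template' fields");
    }
  }
  return { providers: providers as ProviderConfig[], templates: templates as PromptTemplate[] };
}

// Illustrative usage with made-up values
const settings: Settings = {
  providers: [{ name: "Example", baseUrl: "https://api.example.com/v1", apiKey: "secret", model: "your-model" }],
  templates: [{ name: "Event Mail", template: "Write an invitation email for: {{input}}" }],
};

const restored = importSettings(exportSettings(settings));
console.log(searchTemplates(restored.templates, "mail").map((t) => t.name));
```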

LLM Parameters and Prompt Info

You can set typical LLM parameters such as temperature and top_p (1), and inspect your prompts before the request is sent to the LLM (2, 3).
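Inspecting a prompt before sending it amounts to building the request first and showing it to the user. The following minimal TypeScript sketch assumes an OpenAI-style Chat Completions endpoint (`POST {baseUrl}/chat/completions` with `model`, `messages`, `temperature`, and `top_p` in the body). Other providers may expect different formats, and `buildChatRequest` and `previewRequest` are hypothetical helpers, not the extension's code:

```typescript
// Sampling parameters; ranges follow the OpenAI Chat Completions API.
interface ModelParams {
  temperature: number; // 0 to 2; higher values give more random output
  top_p: number;       // 0 to 1; nucleus sampling probability mass
}

interface ChatRequest {
  url: string;
  headers: Record<string, string>;
  body: {
    model: string;
    messages: { role: "system" | "user"; content: string }[];
    temperature: number;
    top_p: number;
  };
}

// Build (but do not send) an OpenAI-style chat completions request.
function buildChatRequest(
  baseUrl: string,
  apiKey: string,
  model: string,
  prompt: string,
  params: ModelParams
): ChatRequest {
  if (params.temperature < 0 || params.temperature > 2) throw new RangeError("temperature must be in [0, 2]");
  if (params.top_p < 0 || params.top_p > 1) throw new RangeError("top_p must be in [0, 1]");
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`, // drop trailing slashes before appending the path
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: {
      model,
      messages: [{ role: "user", content: prompt }],
      temperature: params.temperature,
      top_p: params.top_p,
    },
  };
}

// Render the request for inspection with the API key masked.
function previewRequest(req: ChatRequest): string {
  const headers = { ...req.headers, Authorization: "Bearer ***" };
  return JSON.stringify({ url: req.url, headers, body: req.body }, null, 2);
}

// Illustrative usage with placeholder credentials
const req = buildChatRequest("https://api.openai.com/v1/", "sk-placeholder", "your-model", "Hello", {
  temperature: 0.7,
  top_p: 1,
});
console.log(previewRequest(req));

// Once the user confirms, the request could be sent with fetch, for example:
// const res = await fetch(req.url, { method: "POST", headers: req.headers, body: JSON.stringify(req.body) });
```

This sketch separates building the request from sending it, so the exact payload can be shown in the sidebar first and sent unchanged afterwards.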